Exporting adaptive model data for external analysis

4 Tasks

25 mins

Scenario

U+ Bank implements cross-selling of their credit cards on the web by using Pega Customer Decision Hub™. Self-learning, adaptive models drive the predictions that support the Next Best Actions for each customer. In the production phase of the project, you can export data from the Adaptive Decision Management (ADM) datamart and analyze the performance of your online models over time and across channels, issues, and groups in the external data analysis tool of your choice.

To limit the scope and size of the exported data, you can modify the data flows that write the export files so that they select only the data for the models that interest you.

Use the following credentials to log in to the exercise system:

| Role | User name | Password |
|---|---|---|
| Data scientist | DataScientist | rules |

Your assignment consists of the following tasks:

Task 1: Generate the monitoring export artifacts

In the Prediction Studio settings, configure the Monitoring database export to generate the monitoring export artifacts.

Task 2: Modify the generated ModelSnapshot data flow

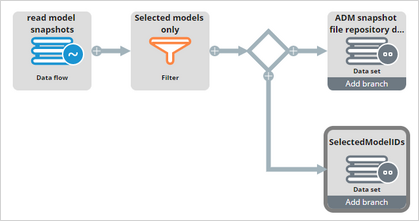

Add a filter component to the ModelSnapshot data flow to select model snapshots for the Web Click Through Rate model. Create a data set that has .pyModelID as the only key, and then use this data set as a second destination in the ModelSnapshot data flow.

Note: You create an extra data set to select the appropriate predictor snapshots at a later stage.

Task 3: Modify the generated PredSnapshot data flow

Add a Merge component to the PredSnapshot data flow to select predictor binning snapshots for the Web Click Through Rate model.

Task 4: Export the monitoring database

In Prediction Studio, export the monitoring database and examine the content of the files.

Challenge Walkthrough

Detailed Tasks

1 Generate the monitoring export artifacts

- On the exercise system landing page, click Pega Infinity™ to log in to Customer Decision Hub.

- Log in as a data scientist:

  - In the User name field, enter DataScientist.

  - In the Password field, enter rules.

- In the navigation pane of Customer Decision Hub, click Intelligence > Prediction Studio to open Prediction Studio.

- In the navigation pane of Prediction Studio, click Settings > Prediction Studio settings to navigate to the settings page.

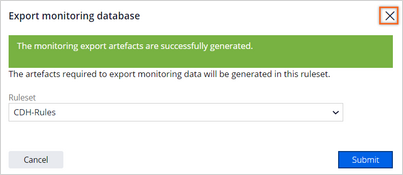

- Scroll down to the Monitoring database export section, and then click Configure to open the Export monitoring database dialog box.

- In the Export monitoring database dialog box, set the Ruleset to CDH-Rules, and then click Submit to generate the monitoring export artifacts.

- Close the Export monitoring database dialog box.

Note: These monitoring export artifacts are typically generated in a development environment and deployed to higher environments through the enterprise pipeline.

- In the lower-left corner, click Back to Customer Decision Hub.

2 Modify the generated ModelSnapshot data flow

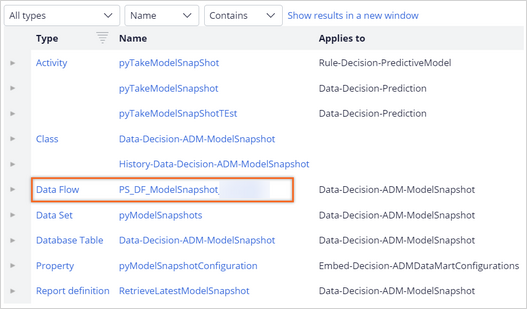

- In the search field, enter ModelSnapshot, and then press the Enter key to search for the ModelSnapshot data flow.

- In the search results, click the ModelSnapshot data flow to open the data flow canvas.

Note: The name of the data flow contains a random identifier that is created during this exercise.

- In the upper-right corner, click Check out.

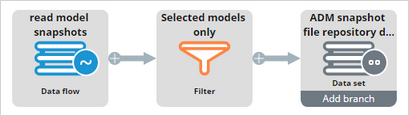

- Click the Add icon, and then click Filter to add a filter component to the data flow.

- Right-click the filter component, and then click Properties to configure the component.

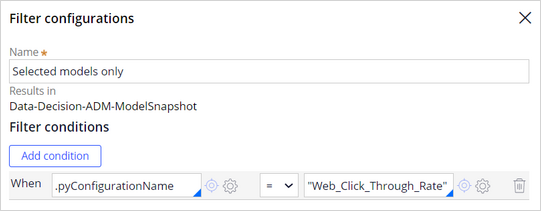

- In the Filter configurations dialog box, in the Name field, enter Selected models only.

- In the Filter conditions section, click Add condition.

- Enter the following condition: When .pyConfigurationName = "Web_Click_Through_Rate".

- Click Submit to close the Filter configurations dialog box.

- In the navigation pane of Customer Decision Hub, click Intelligence > Prediction Studio to open Prediction Studio.

- In the navigation pane of Prediction Studio, click Data > Data sets to navigate to the data sets landing page.

- In the upper-right corner, click New to configure a new data set.

- In the Name field, enter SelectedModelIDs.

- In the Type field, select Decision Data Store.

- In the Apply to field, select Data-Decision-ADM-ModelSnapshot.

- In the Add to ruleset field, select CDH-Rules.

- Click Create to configure the data set.

Note: You create the SelectedModelIDs data set to select the appropriate predictor snapshots in the next task.

- In the Keys section, delete the .pySnapshotTime and .pxApplication fields so that .pyModelID is the only key.

- In the upper-right corner, click Save.

- In the lower-left corner, click Back to Customer Decision Hub.
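Because .pyModelID is now the only key of SelectedModelIDs, writing a record whose model ID is already stored should replace the earlier record, so the data set holds one record per model rather than one per snapshot. The following Python sketch models that keyed (upsert) behavior with a dictionary. It is an illustration under that assumption, not the Decision Data Store implementation, and the sample records and their values are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical snapshot records; only the field names come from the exercise.
Snapshot = Dict[str, str]


def upsert_by_key(records: Iterable[Snapshot], key_fields: List[str]) -> Dict[Tuple[str, ...], Snapshot]:
    """Simulate a keyed data set: a record with an existing key replaces the stored one."""
    store: Dict[Tuple[str, ...], Snapshot] = {}
    for record in records:
        key = tuple(record[field] for field in key_fields)
        store[key] = record  # same key -> overwrite (upsert)
    return store


snapshots = [
    {"pyModelID": "m1", "pySnapshotTime": "20240101T000000", "pxApplication": "CDH"},
    {"pyModelID": "m1", "pySnapshotTime": "20240102T000000", "pxApplication": "CDH"},
    {"pyModelID": "m2", "pySnapshotTime": "20240101T000000", "pxApplication": "CDH"},
]

# With the original three key fields, every snapshot is kept.
print(len(upsert_by_key(snapshots, ["pxApplication", "pyModelID", "pySnapshotTime"])))  # 3
# With .pyModelID as the only key, one record per model remains.
print(len(upsert_by_key(snapshots, ["pyModelID"])))  # 2
```

Keeping only one record per model is enough for the later task, which needs the set of selected model IDs rather than their full snapshot history.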

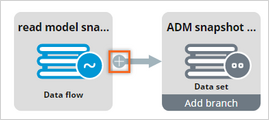

- On the ADM snapshot file repository data set destination component of the data flow, click Add branch.

- Right-click the new destination tile, and then click Properties to configure the component:

  - In the Output data to section, select Data set as the destination.

  - In the Data set field, enter or select SelectedModelIDs.

  - Click Submit to close the dialog box.

- In the upper-right corner, click Check in.

- In the Check in dialog box, enter appropriate comments, and then click Check in.
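Conceptually, the modified ModelSnapshot data flow filters the incoming snapshots on .pyConfigurationName and then sends every remaining record down both branches: the ADM snapshot file repository data set receives all matching snapshots, and SelectedModelIDs keeps one record per model. The Python sketch below imitates that flow with in-memory collections. The function name `run_model_snapshot_flow` and the sample records are hypothetical, and the sketch is not the Pega data flow engine.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]

SELECTED_CONFIGURATION = "Web_Click_Through_Rate"  # value used in the filter condition


def run_model_snapshot_flow(source: List[Record]) -> Tuple[List[Record], Dict[str, Record]]:
    """Hypothetical imitation of the modified ModelSnapshot data flow."""
    file_destination: List[Record] = []          # stands in for the ADM snapshot file repository data set
    selected_model_ids: Dict[str, Record] = {}   # stands in for SelectedModelIDs (key: pyModelID)
    for record in source:
        # Filter component "Selected models only"
        if record["pyConfigurationName"] != SELECTED_CONFIGURATION:
            continue
        # Branch: the same record goes to both destinations
        file_destination.append(record)
        selected_model_ids[record["pyModelID"]] = record
    return file_destination, selected_model_ids


source = [
    {"pyModelID": "m1", "pyConfigurationName": "Web_Click_Through_Rate", "pySnapshotTime": "t1"},
    {"pyModelID": "m1", "pyConfigurationName": "Web_Click_Through_Rate", "pySnapshotTime": "t2"},
    {"pyModelID": "m9", "pyConfigurationName": "Other_Model_Configuration", "pySnapshotTime": "t1"},
]
files, ids = run_model_snapshot_flow(source)
print(len(files), sorted(ids))  # 2 ['m1']
```

Placing the filter before the branch means that both destinations see the same filtered stream, so the file export and SelectedModelIDs stay consistent with each other.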

3 Modify the generated PredSnapshot data flow

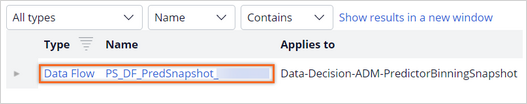

- In the search field, enter PredSnapshot, and then press Enter to search for the PredSnapshot data flow.

- In the search results, click the PredSnapshot data flow to open the data flow canvas.

Note: The name of the data flow contains a random identifier that is created during this exercise.

- In the upper-right corner, click Check out.

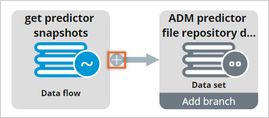

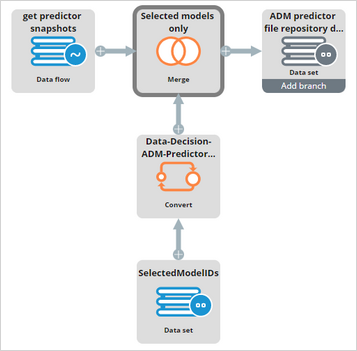

- Click the Add icon, and then click Merge to add a second source component.

- Right-click the second source component, and then click Properties to configure the component:

  - In the Source configurations dialog box, in the Source field, select Data set.

  - In the Input class field, enter or select Data-Decision-ADM-ModelSnapshot.

  - In the Data set field, enter or select SelectedModelIDs.

  - Click Submit to close the dialog box.

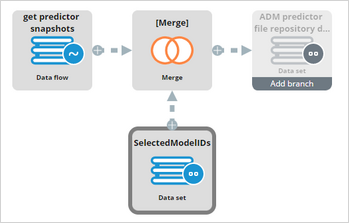

- Click the Add icon on the SelectedModelIDs component, and then click Convert.

- Right-click the Convert component, and then click Properties to configure the component:

  - In the Convert configurations section, in the Into page(s) of class field, enter or select Data-Decision-ADM-PredictorBinningSnapshot.

  - In the Field mapping section, click Add mapping.

  - In the Target field, enter or select .pyModelID.

  - In the Source field, enter or select .pyModelID.

  - Click Submit to close the dialog box.

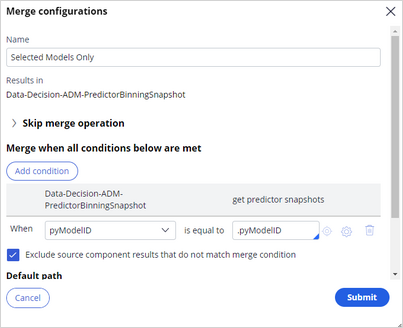

- Right-click the Merge component, and then click Properties to configure the component:

  - In the Name field, enter Selected models only.

  - In the Merge when all conditions below are met section, in the Data-Decision-ADM-PredictorBinningSnapshot field, select pyModelID.

  - In the get predictor snapshots field, enter or select .pyModelID.

  - Select Exclude source component results that do not match merge condition.

  - Click Submit to close the dialog box.

- In the upper-right corner, click Check in.

- In the Check in dialog box, enter appropriate comments, and then click Check in.
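Together, the Convert and Merge components behave much like an inner join on .pyModelID: the Convert component turns each SelectedModelIDs record into a Data-Decision-ADM-PredictorBinningSnapshot page that carries only .pyModelID, and because the Merge component excludes source results that do not match the merge condition, only predictor binning snapshots of the selected models reach the destination. The Python sketch below illustrates that logic under these assumptions. The function names and sample records (including the predictor name) are hypothetical.

```python
from typing import Dict, List

Record = Dict[str, str]


def convert_to_binning_page(model_snapshot: Record) -> Record:
    """Imitates the Convert component: copy only .pyModelID into a new page."""
    # Field mapping: Target .pyModelID <- Source .pyModelID
    return {"pyModelID": model_snapshot["pyModelID"]}


def merge_selected_models(binning_snapshots: List[Record], selected_pages: List[Record]) -> List[Record]:
    """Imitates the Merge component with non-matching source results excluded."""
    selected_ids = {page["pyModelID"] for page in selected_pages}
    kept: List[Record] = []
    for snapshot in binning_snapshots:
        # Merge condition: .pyModelID = .pyModelID
        if snapshot["pyModelID"] not in selected_ids:
            continue  # excluded: no matching record in SelectedModelIDs
        # The converted page carries only .pyModelID, which already matches,
        # so the binning snapshot passes through unchanged.
        kept.append(snapshot)
    return kept


selected_pages = [
    convert_to_binning_page({"pyModelID": "m1", "pyConfigurationName": "Web_Click_Through_Rate"})
]
binning_snapshots = [
    {"pyModelID": "m1", "pyPredictorName": "Customer.Age"},
    {"pyModelID": "m9", "pyPredictorName": "Customer.Age"},
]
result = merge_selected_models(binning_snapshots, selected_pages)
print([r["pyModelID"] for r in result])  # ['m1']
```

Because SelectedModelIDs holds at most one record per model ID, each predictor binning snapshot can match at most one page, so the merge does not duplicate rows.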

4 Export the monitoring database

- In the navigation pane of Customer Decision Hub, click Intelligence > Prediction Studio to open Prediction Studio.

- In the upper-right corner, click Actions > Export > Export monitoring database.

- Click Export to start the process.

Note: This action is typically done in a Business Operations Environment, where the adaptive models represent the production data. For this exercise, and for testing purposes in general, you can also run this export in a development environment, such as the exercise system used for this challenge.

- In the upper-right corner, click Notifications to confirm that the export initialized and completed.

- On the exercise system landing page, click File Browser to open the repository.

- Log in to the repository:

  - In the Username field, enter pega-filerepo.

  - In the Password field, enter pega-filerepo.

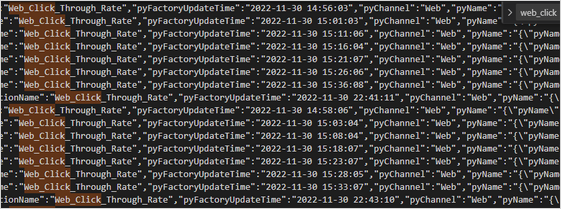

- Open the datamart folder, and then open the ZIP file that starts with Data-Decision-ADM-Mod.

- Click Download to download the ZIP file to your local system, and then extract the Data-Decision-ADM-ModelSnapshot file.

Tip: The format of the ZIP file is compatible with the 7-Zip tool, but not with some other compression tools.

- Confirm that the Data-Decision-ADM-ModelSnapshot file contains only the model snapshots for the adaptive models that are based on the Web Click Through Rate model configuration.

- Return to the File Browser and extract the Data-Decision-ADM-PredictorBinningSnapshot file to inspect the predictor binning snapshots.

Note: The model snapshot and predictor binning snapshot files can be used for analysis in external tools.
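Before you load the files into an external tool, a quick script can repeat the manual checks. The following Python sketch assumes that each extracted file contains newline-delimited JSON (one snapshot object per line); verify the actual structure of your export before relying on it. The file names in the demonstration, the `check_export` helper, and the sample records are hypothetical; replace the paths with the files that you extracted.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List

TARGET_CONFIGURATION = "Web_Click_Through_Rate"


def read_json_lines(path: Path) -> List[Dict]:
    """Parse an extracted export file, assuming one JSON object per line."""
    records: List[Dict] = []
    with path.open(encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:  # skip blank lines
                records.append(json.loads(line))
    return records


def check_export(model_records: List[Dict], binning_records: List[Dict]) -> List[str]:
    """Return problems found in the export; an empty list means the filters worked."""
    problems: List[str] = []
    configurations = {str(r.get("pyConfigurationName")) for r in model_records}
    unexpected = configurations - {TARGET_CONFIGURATION}
    if unexpected:
        problems.append(f"unexpected model configurations: {sorted(unexpected)}")
    model_ids = {str(r.get("pyModelID")) for r in model_records}
    stray = {str(r.get("pyModelID")) for r in binning_records} - model_ids
    if stray:
        problems.append(f"predictor snapshots for unselected models: {sorted(stray)}")
    return problems


# Demonstration with hypothetical sample files in a temporary folder.
with tempfile.TemporaryDirectory() as folder:
    model_file = Path(folder) / "model_snapshots.json"
    binning_file = Path(folder) / "binning_snapshots.json"
    model_file.write_text(
        json.dumps({"pyModelID": "m1", "pyConfigurationName": TARGET_CONFIGURATION}) + "\n",
        encoding="utf-8",
    )
    binning_file.write_text(
        json.dumps({"pyModelID": "m1", "pyPredictorName": "Customer.Age"}) + "\n",
        encoding="utf-8",
    )
    models = read_json_lines(model_file)
    binning = read_json_lines(binning_file)

issues = check_export(models, binning)
print(issues or "export contains only the selected models")
```

The second check relies on the PredSnapshot data flow selecting model IDs from SelectedModelIDs, so every predictor binning snapshot should belong to a model that also appears in the model snapshot file.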
