Configuring an adaptive model

U+Bank wants to use Pega Customer Decision Hub™ to show a personalized credit card offer in a web banner when a customer logs in to the U+Bank website. Customer Decision Hub uses AI to arbitrate between the offers for which a customer is eligible.

In the implementation phase of the project, enhance the prediction that drives the decision of which banner to show to a customer. Add behavioral data and model scores as potentially relevant predictors to the adaptive model configuration that drives the prediction.


Transcript

U+Bank wants to use Pega Customer Decision Hub™ to show a personalized credit card offer in a web banner when a customer logs in to the U+Bank website. Customer Decision Hub uses the Predict Web Propensity prediction to arbitrate between the offers for which a customer is eligible.

In the implementation phase, a data scientist configures the potential predictors of the Web Click Through Rate adaptive model configuration that drives the Predict Web Propensity prediction.

Predictors can be one of two types: numeric or symbolic. The system uses the property type as the default predictor type during the initial setup, but you can change the predictor type. For example, when you know a numeric predictor has a small number of distinct values, such as when the contract duration is either 12 or 24 months, change the predictor type from numeric to symbolic.

Remember that changing the predictor type effectively means removing the predictor and then adding it again, and there is no way to retain the previous responses for that predictor. As a best practice, make these changes during the implementation phase of a project.
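To make the rule of thumb concrete, the following hypothetical Python sketch suggests a predictor type from sample values of a field. It is not part of Customer Decision Hub, where you change the type in the adaptive model configuration; the function name suggest_predictor_type and the cut-off of five distinct values are assumptions chosen for illustration.

```python
from typing import Sequence


def suggest_predictor_type(values: Sequence[object], max_distinct: int = 5) -> str:
    """Suggest "symbolic" or "numeric" for a candidate predictor.

    Hypothetical heuristic: a numeric field with only a few distinct values
    (such as a contract duration of 12 or 24 months) behaves like a category,
    so it is suggested as symbolic. max_distinct is an assumed cut-off.
    """
    non_missing = [v for v in values if v is not None]
    # bool is a subclass of int in Python, so exclude it explicitly.
    is_numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_missing
    )
    if not is_numeric:
        return "symbolic"
    if len(set(non_missing)) <= max_distinct:
        return "symbolic"
    return "numeric"
```

For example, a contract duration field with the values 12 and 24 gets the suggestion symbolic, while a balance field with many distinct amounts stays numeric.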

You can enhance adaptive models by adding many additional fields as potential predictors. The models decide which ones to use. Additional predictors can include customer behavior, contextual information, and past interactions with the bank. The FSClickstream page represents customer behavioral data that the system architect recently introduced.

You can also add model scores that third-party models generate. A system architect sets up a new entity with the scores as properties and makes it available to you. You can then include the model scores as potential predictors.

You can make input fields that are not directly available in the customer data model accessible to the models by configuring them as parameterized predictors. For example, use a parameterized predictor for a model score that a predictive model generates in real time, or for a value that a calculation in a decision strategy produces.

The Interaction History data set captures the customer responses. Aggregated fields from Interaction History summaries are automatically provided to the models as predictors. Interaction History summaries leverage historical customer interactions to improve the predictions.

An example of such a predictor is the group of the offer that the customer most recently accepted in the contact center.
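To illustrate what such an aggregated predictor contains, the following Python sketch derives the group of the most recently accepted offer in one channel from a list of interaction records. It is a simplified assumption: the Interaction class, its fields, the "Accepted" outcome label, and the "CallCenter" channel name are hypothetical stand-ins, and Customer Decision Hub computes Interaction History summaries for you.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass
class Interaction:
    # Hypothetical, simplified Interaction History record.
    timestamp: datetime
    channel: str
    outcome: str
    group: str


def last_accepted_group(
    history: Iterable[Interaction], channel: str = "CallCenter"
) -> Optional[str]:
    """Return the group of the most recently accepted offer in one channel.

    Returns None when the customer has not accepted any offer in that channel.
    """
    accepted = [i for i in history if i.channel == channel and i.outcome == "Accepted"]
    if not accepted:
        return None
    return max(accepted, key=lambda i: i.timestamp).group


history = [
    Interaction(datetime(2024, 3, 1, 9, 0), "CallCenter", "Accepted", "CreditCards"),
    Interaction(datetime(2024, 3, 5, 14, 30), "CallCenter", "Accepted", "Loans"),
    Interaction(datetime(2024, 3, 7, 11, 15), "Web", "Accepted", "Mortgages"),
    Interaction(datetime(2024, 3, 8, 16, 45), "CallCenter", "Rejected", "Savings"),
]
print(last_accepted_group(history))  # Loans
```

The later rejection in the contact center does not change the value, because only accepted offers count toward this predictor.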

Adaptive Decision Manager updates the adaptive models every hour to process the latest responses.

You can save historical data in a repository for offline analysis.

The default values for the advanced settings of an adaptive model follow best practices. Only a highly experienced data scientist should change them.

By default, the system uses all received responses for each update cycle, which suits most use cases. The option to use a subset of responses assigns additional weight to recent responses and increasingly less weight to older responses when Adaptive Decision Manager updates the models. The Monitor performance for the last field determines the number of weighted responses that the model performance calculation uses for monitoring purposes. The default setting is 0, which means that the calculation uses all historical data.
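The effect of these two settings can be sketched in Python. This is an illustration under stated assumptions, not the Adaptive Decision Manager implementation: exponential decay is an assumed weighting scheme, and response_weights, responses_for_monitoring, and the decay parameter are hypothetical names. The monitor_last argument mirrors the Monitor performance for the last field, where 0 means that all historical responses are used.

```python
from typing import List, Sequence


def response_weights(n_responses: int, decay: float = 0.999) -> List[float]:
    """Weights for responses ordered oldest first, newest last.

    Assumed exponential decay: the newest response gets weight 1.0 and each
    older response is multiplied by `decay` once more, so older responses
    count increasingly less.
    """
    return [decay ** (n_responses - 1 - i) for i in range(n_responses)]


def responses_for_monitoring(responses: Sequence[int], monitor_last: int = 0) -> Sequence[int]:
    """Select the responses used for the performance calculation.

    monitor_last = 0 (the default) uses all historical responses;
    a positive value keeps only the most recent `monitor_last` responses.
    """
    if monitor_last <= 0:
        return responses
    return responses[-monitor_last:]


print(response_weights(3, decay=0.5))  # [0.25, 0.5, 1.0]
```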

Additional parameters determine the binning of the responses.

The Grouping granularity field determines the granularity of the predictor binning. A higher value results in more bins. The Grouping minimum cases field determines the minimum fraction of cases for each interval. The default setting is 5 percent of the cases. Together, these two settings control the grouping of predictors by influencing the number of bins. A higher number of bins might increase the performance of the model, but the model might also become less robust.
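The interplay of the two settings can be illustrated with a simplified binning routine. This Python sketch is an assumption, not the Adaptive Decision Manager algorithm: it splits sorted values into `granularity` equal-frequency bins and then merges adjacent bins until each holds at least `min_fraction` of all cases. The function name bin_numeric is hypothetical, and the sketch ignores ties, so equal values can land in different bins.

```python
from typing import List, Sequence


def bin_numeric(values: Sequence[float], granularity: int = 10,
                min_fraction: float = 0.05) -> List[List[float]]:
    """Group values into bins controlled by granularity and minimum cases."""
    data = sorted(values)
    n = len(data)
    if n == 0:
        return []
    # Step 1: equal-frequency split into `granularity` candidate bins.
    cuts = [(k * n) // granularity for k in range(granularity + 1)]
    candidates = [data[cuts[k]:cuts[k + 1]] for k in range(granularity)]
    candidates = [c for c in candidates if c]  # drop empty slices
    # Step 2: merge adjacent candidates until each bin has enough cases.
    min_cases = min_fraction * n
    bins: List[List[float]] = []
    current: List[float] = []
    for cand in candidates:
        current.extend(cand)
        if len(current) >= min_cases:
            bins.append(current)
            current = []
    if current:  # leftover cases join the last bin (or form the only bin)
        if bins:
            bins[-1].extend(current)
        else:
            bins.append(current)
    return bins


print(len(bin_numeric(range(100), granularity=10, min_fraction=0.05)))  # 10
print(len(bin_numeric(range(100), granularity=10, min_fraction=0.15)))  # 5
```

With 100 cases, a granularity of 10 produces ten candidate bins of ten cases each. A 5 percent minimum keeps all ten, while a 15 percent minimum forces pairs to merge, leaving five larger and more robust bins.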

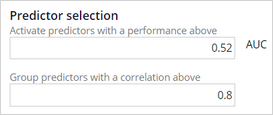

The system activates predictors that perform above a threshold. Over time, the system dynamically activates or deactivates the predictors when they cross the threshold.
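The activation rule can be sketched as a threshold test that runs again after every model update. In this hypothetical Python sketch, the function names, the predictor names, and the threshold of 0.55 are assumptions for illustration; performance is expressed as a value between 0.5 and 1.0.

```python
from typing import Dict, Set, Tuple


def active_predictors(performance: Dict[str, float], threshold: float) -> Set[str]:
    """Return the predictors whose performance is above the threshold."""
    return {name for name, score in performance.items() if score > threshold}


def activation_changes(previous: Set[str], current: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (newly activated, newly deactivated) predictors between two updates."""
    return current - previous, previous - current


THRESHOLD = 0.55  # assumed example value, not a product default
cycle_1 = {"Age": 0.61, "PageViews": 0.53, "CreditScore": 0.58}
cycle_2 = {"Age": 0.60, "PageViews": 0.57, "CreditScore": 0.54}

active_1 = active_predictors(cycle_1, THRESHOLD)
active_2 = active_predictors(cycle_2, THRESHOLD)
activated, deactivated = activation_changes(active_1, active_2)
print(sorted(activated), sorted(deactivated))  # ['PageViews'] ['CreditScore']
```

Between the two updates, PageViews crosses the threshold upward and becomes active, while CreditScore drops below it and becomes inactive.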

The area under the curve (AUC) measures the performance of an individual predictor: it indicates how well the predictor distinguishes between the classes, such as customers who click the banner and customers who do not. The minimum value is 0.5, which corresponds to a predictor with no predictive power, so always set the performance threshold above 0.5.

The system considers pairs of predictors whose mutual correlation exceeds a threshold as similar, groups them, and uses only the best predictor in each group for adaptive learning.
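Both measures can be illustrated with a short Python sketch. It computes a rank-based AUC for each predictor and folds it with max(auc, 1 - auc), which is one way to obtain a measure whose minimum is 0.5; whether Pega computes predictor performance exactly this way is an assumption. The grouping step is a greedy stand-in for the system's grouping: predictors are ranked by AUC, and a predictor is kept only if its Pearson correlation with every predictor already kept stays at or below the threshold. All function and predictor names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based AUC, folded so that the minimum is 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return 0.5  # no contrast between classes: treat as uninformative
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half a win
    raw = wins / (len(pos) * len(neg))
    return max(raw, 1.0 - raw)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 for a constant input."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def best_per_group(values: Dict[str, Sequence[float]], labels: Sequence[int],
                   corr_threshold: float) -> List[str]:
    """Greedily group correlated predictors; keep the highest-AUC one per group."""
    ranked = sorted(values, key=lambda name: auc(values[name], labels), reverse=True)
    kept: List[str] = []
    for name in ranked:
        if all(abs(pearson(values[name], values[k])) <= corr_threshold for k in kept):
            kept.append(name)
    return kept


labels = [0, 0, 0, 1, 1, 1]
predictors = {
    "Income": [1, 2, 3, 4, 5, 6],      # separates the classes perfectly
    "IncomeBand": [1, 2, 4, 3, 5, 6],  # strongly correlated with Income
    "PageViews": [5, 1, 4, 2, 6, 3],   # weak, but unrelated to Income
}
print(best_per_group(predictors, labels, corr_threshold=0.8))  # ['Income', 'PageViews']
```

IncomeBand has a good AUC of about 0.89, but its correlation with Income (about 0.94) exceeds the threshold, so only the stronger Income represents that group.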

You have reached the end of this video. What did it show you?

- How to configure additional potential predictors for an adaptive model.

- How to change the predictor type of a field.

- Which type of predictors you can use for an adaptive model.

- When the system updates an adaptive model.

- What advanced settings you can configure for an adaptive model.
