Batch import data jobs

Streamline the data ingestion process with data jobs in Pega Customer Decision Hub™. Data jobs give you a standardized, well-defined way to import data into Customer Decision Hub. You can also monitor the progress of data jobs in Customer Profile Designer.

Creating a new batch import data job

The U+ Bank technical team has finished the data mapping workshop and is now ready to ingest customer data into Pega Customer Decision Hub. You use batch import data jobs to ingest customer data into a target Customer Profile Designer data source. This process typically runs nightly, or on demand when new data is available.

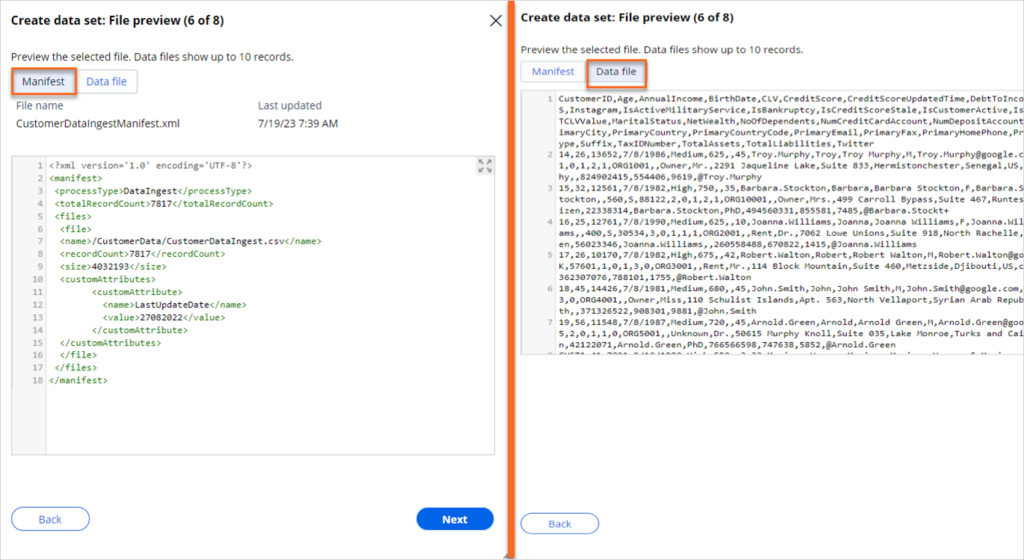

In this demo, the customer data is in a CSV format, located in the Amazon S3 file repository. The first row contains the customer properties, and the remaining 7,817 rows contain the actual values that are set for ingestion into the target data source. The file has a size of 4,032,193 bytes.

Along with the CSV file, the system uses a manifest file, which has details about the process type, total record count, and relative file path to the CustomerDataIngest.csv file.
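
To make the manifest contents concrete, the following Python sketch counts the data rows in a CSV file and assembles a small XML document that carries the three details mentioned above. The element names (`manifest`, `processType`, `totalRecordCount`, `file`, `name`, `size`), the `DataIngest` value, and the sample columns are assumptions for illustration only, not the confirmed Pega manifest schema; check the product documentation for the exact format.

```python
import csv
import io
import xml.etree.ElementTree as ET


def count_records(csv_text: str) -> int:
    """Count data rows, treating the first row as the header."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    return max(len(rows) - 1, 0)


def build_manifest(process_type: str, record_count: int,
                   relative_path: str, size_bytes: int) -> str:
    """Assemble a manifest-like XML string (element names are hypothetical)."""
    root = ET.Element("manifest")
    ET.SubElement(root, "processType").text = process_type
    ET.SubElement(root, "totalRecordCount").text = str(record_count)
    file_el = ET.SubElement(root, "file")
    ET.SubElement(file_el, "name").text = relative_path
    ET.SubElement(file_el, "size").text = str(size_bytes)
    return ET.tostring(root, encoding="unicode")


# Tiny stand-in for CustomerDataIngest.csv (column names are made up).
sample = "CustomerID,FirstName\nC-1,Ana\nC-2,Ben\n"
manifest_xml = build_manifest(
    "DataIngest",  # assumed process type value
    count_records(sample),
    "CustomerData/CustomerDataIngest.csv",
    len(sample.encode("utf-8")),
)
print(manifest_xml)
```

Deriving the record count from the data file itself, rather than typing it by hand, helps keep the manifest consistent with the file that is actually delivered.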

To access a repository, you define a Repository rule in Pega Platform™. Note that the Root path defined in the repository is /DataJobs/. Ensure that the file path in the manifest file is relative to this path. In this case, the customer files are in the /DataJobs/CustomerData/ folder of the pegaenablement-assets bucket in an Amazon S3 repository.
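
As a quick illustration of what "relative to the Root path" means, here is a minimal Python sketch that derives the manifest path from the repository root and the full file location. The helper name `manifest_relative_path` is hypothetical; the paths are the ones used in this demo.

```python
import posixpath


def manifest_relative_path(root_path: str, absolute_path: str) -> str:
    """Return the file path relative to the repository root path."""
    root = posixpath.normpath(root_path)
    target = posixpath.normpath(absolute_path)
    # Reject files that live outside the repository root.
    if posixpath.commonpath([root, target]) != root:
        raise ValueError(f"{absolute_path} is not under {root_path}")
    return posixpath.relpath(target, root)


relative = manifest_relative_path(
    "/DataJobs/", "/DataJobs/CustomerData/CustomerDataIngest.csv"
)
print(relative)  # CustomerData/CustomerDataIngest.csv
```

With a root path of /DataJobs/, the manifest would therefore reference CustomerData/CustomerDataIngest.csv rather than the full path.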

To create a new data job, you need the CanUpdateJobConfiguration privilege in your Access Group.

In App Studio, you can define the general settings for the import data jobs. These settings define the retention duration of archived files and import runs, and import run wait times when the files are not available in the file repository.

Data ingestion is divided into two main tasks:

- Defining a data set to read from the source.

- Defining the data job to ingest the source data to its destination.

To access the source data in a repository, define a new file data set from the Profile Data Sources in Customer Decision Hub.

Give the file data set a name, select the source type, and enter the Apply to class.

Then select the repository that contains the data to import.

Now, browse the repository to select the file or location that contains the data. When creating the file data set for the first time, load the manifest and data file in the repository as a best practice. This enables you to map columns in the CSV files to the properties in the data model in the Field mapping step.

You can import data with a manifest file (XML), a compressed file (GZIP, ZIP), or a data file (CSV, JSON). To import multiple files with similar names, you can use a wildcard character (*) to define a file name pattern. For example, enter Folder/File*.csv, or enter a file path that might not exist in the repository.
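
To get a feel for which files a pattern such as Folder/File*.csv would select, the following Python sketch applies shell-style wildcard matching from the standard library. This only approximates the idea: the exact matching rules that Customer Decision Hub applies are not described here and might differ (for example, `fnmatchcase` also lets `*` match a `/`). The file names are made up.

```python
from fnmatch import fnmatchcase


def matching_files(pattern: str, paths: list[str]) -> list[str]:
    """Return the paths that match a shell-style wildcard pattern."""
    return [p for p in paths if fnmatchcase(p, pattern)]


# Hypothetical repository listing.
paths = [
    "Folder/File1.csv",
    "Folder/File_2024.csv",
    "Folder/Other.csv",
    "Folder/File1.json",
]
selected = matching_files("Folder/File*.csv", paths)
print(selected)
```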

Next, define the structure of the data in a CSV file, and then configure additional details.

Once you configure the file details, you can view the information about the manifest and data file on the Data file tab.

Continue to map the columns that are defined in the CSV file to the fields in the data model of the target data source.

Confirm that all settings are correct for the file data set.

Now that the source is ready, configure the data job to ingest the data from the source to its destination.

To define a new data job, navigate to the Data Jobs landing page in Customer Decision Hub.

Begin by selecting the target data source into which the data is ingested. A target data source is one of the data sources that are part of the Customer Insights Cache, for example, Customer or Account. You can define one or more data jobs for each data source.

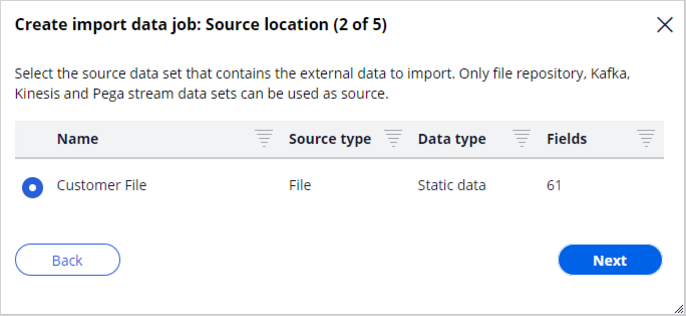

Then select the source data set that contains the data to ingest.

Import data jobs either begin the ingestion process automatically when they detect a token file in the file repository folder, or follow a schedule to process the files at a given time and frequency.

For now, select the File detection trigger type.

Each run of a data job tracks and monitors the number of records that fail to process as a result of errors. The run fails when there are more failed records than the defined error threshold.

In the final step, confirm that all the settings are correct for the data job.

Now, you see the new Import data job with the File detection trigger on the Data Jobs landing page. The ingestion process begins when the system detects a token file as defined in the data job.

Everything is ready, and the data job continuously monitors the Amazon S3 repository for the .TOK file. For the purposes of this demo, the .TOK file is created manually.
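
Creating the token file by hand amounts to placing a marker file in the monitored folder once the data and manifest files are in place. The Python sketch below shows the idea against a local temporary directory that stands in for the Amazon S3 repository. The file name `.TOK`, the empty content, and the folder layout are assumptions; the actual token file name is whatever the data job is configured to detect.

```python
import tempfile
from pathlib import Path


def create_token_file(folder: Path, name: str = ".TOK") -> Path:
    """Create an empty token file in the folder and return its path."""
    folder.mkdir(parents=True, exist_ok=True)
    token = folder / name
    token.touch()
    return token


# A temporary local directory stands in for the repository.
with tempfile.TemporaryDirectory() as tmp:
    token = create_token_file(Path(tmp) / "DataJobs" / "CustomerData")
    token_name = token.name
    token_existed = token.exists()

print(token_name, token_existed)
```

Writing the token file last is the point of the pattern: the file signals that the upload is complete, so the job does not start on partially transferred data.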

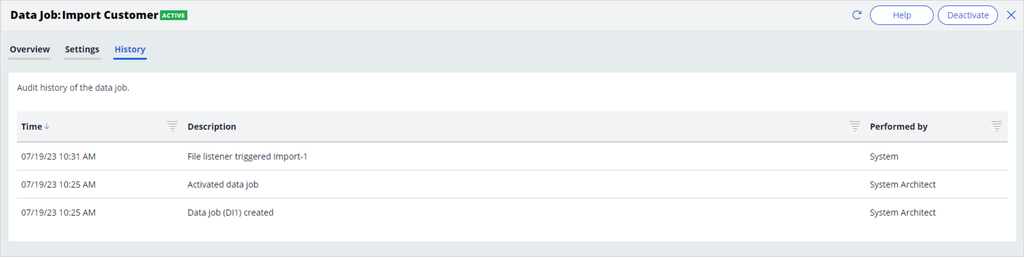

After the file listener detects the token file, the data ingestion begins.

On the Overview tab of the data job, you can see the number of processed records, which includes successful records, failed records, and the final status.

Navigate to the targeted profile data source. On the Records tab, you can see the ingested data.

The Data Jobs tab lists all active and inactive data jobs for this data source. An operator with the PegaMarketing_Core:DataJobsObservability access role can view the progress and status on the Data Jobs tab.

Double-click the data job to view its properties. The type of the data job, the trigger method, the target profile data source, and the manifest file details are displayed. Each run and its details are available in the Runs section. You can click a run to see an overview and a detailed audit history of the completed stages.

On the Settings tab, you can make changes to the configuration of a data job.

Once the process completes, the system moves the processed data and manifest files to a dedicated Archive location, along with the <Data> and <RunID>.

The Source data set is available in the Source section. It holds the file data set configuration and, in the case of CSV files, the field mappings.

The rules that the system generates for the data job are accessible in the Supporting Artifacts section.

The Ingestion staging data flow is used for record validation. Records are parsed, optionally validated, and then immediately discarded. The system verifies the following two items when the data flow completes:

- Whether the number of failures exceeds the configured value.

- Whether the total number of processed records matches the total record number in the manifest file.
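
The two checks above can be sketched in Python as follows. This is an illustrative model of the logic only, not the implementation that the system generates; the `StagingResult` type and the function name are hypothetical. The threshold comparison follows the rule stated earlier: the run fails when there are more failed records than the defined error threshold.

```python
from dataclasses import dataclass


@dataclass
class StagingResult:
    processed: int  # total records processed (successful + failed)
    failed: int     # records that failed to process


def staging_problems(result: StagingResult, error_threshold: int,
                     manifest_total: int) -> list[str]:
    """Return the reasons a staging run would be rejected (empty if none)."""
    problems = []
    if result.failed > error_threshold:
        problems.append(
            f"{result.failed} failed records exceed threshold {error_threshold}"
        )
    if result.processed != manifest_total:
        problems.append(
            f"processed {result.processed} records, manifest says {manifest_total}"
        )
    return problems


ok = staging_problems(StagingResult(processed=7817, failed=0), 10, 7817)
bad = staging_problems(StagingResult(processed=7800, failed=25), 10, 7817)
print(ok)
print(bad)
```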

You can create a pyRecordValidation activity in the class of the data job to add further validations.

After the successful completion of the record validation, the system triggers the Ingestion data flow to process the data to the target destination. The Sub data flow component in the ingestion data flow is available as an extension point to the ingestion process.

As with the ingestion artifacts, the system also creates the Deletion staging data flow and the Deletion data flow, which are used to delete records from the target data source. The manifest file determines which data flow to trigger. When the processType is DataDelete, the system uses the records in the CSV file to delete the records in the target data source.
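
The way the manifest selects a data flow pair can be pictured with a small Python sketch. Only the `DataDelete` value comes from this topic; treating every other value as an ingestion run is a simplifying assumption, and the function name is hypothetical.

```python
def data_flows_for(process_type: str) -> tuple[str, str]:
    """Return the (staging, main) data flow pair for a manifest processType."""
    if process_type == "DataDelete":
        return ("Deletion staging", "Deletion")
    # Assumption: any other process type is handled as an ingestion run.
    return ("Ingestion staging", "Ingestion")


print(data_flows_for("DataDelete"))
print(data_flows_for("DataIngest"))  # "DataIngest" is an assumed value
```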

Each data import job has one File Listener instance. The file listener is active as long as the data import job is active.

The system also creates a Service File and a Service Package that are associated with the file listener.

On the History tab, the audit history of the data job is displayed.

You can deactivate a data job at any time.

The system enables all data job alerts by default. To receive or opt out of notifications about events in the data jobs, for example, completed and failed runs that might require your attention, adjust the Notification preferences in Customer Decision Hub.

Challenge

Tip: To practice what you have learned in this topic, consider taking the Setting up data ingestion challenge.
