Queue processors and job schedulers

Pega Platform™ supports several options for background processing. You can use queue processors, job schedulers, standard and advanced agents, service-level agreements (SLAs), wait shapes, and listeners to design background processing in your application.

Note: Use job scheduler and queue processor rules instead of agents for better scalability, ease of use, and faster background processing.

Queue processor

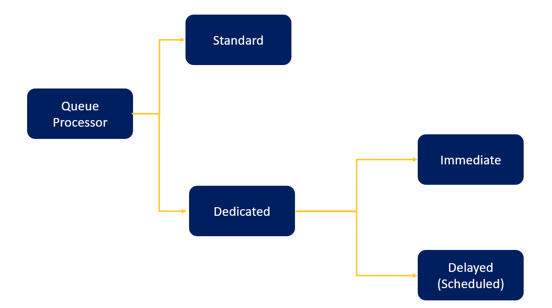

A queue processor rule is an internal background process that you configure for queue management and asynchronous message processing. Use standard queue processor rules for simple queue management or low-throughput scenarios, or use dedicated queue processor rules for higher-scaling throughput and customized or delayed processing of messages.

Pega Platform™ provides many default standard queue processors; the system triggers pzStandardProcessor internally to queue messages when you select Standard in the Queue-For-Processing method or the Run in background shape. This queue processor is an Immediate type, and you cannot delay the processing.

The following diagram shows the types of queue processors. By default, Pega Platform includes standard and dedicated queue processors; there are two types of dedicated queue processors: immediate or delayed.

By using a queue processor rule, you can focus on configuring specific operations that occur in the background. Pega Platform provides built-in features for error handling, queuing, and dequeuing and can conduct conditional commits when using a queue processor. Applications that stem from a common framework or Pega Platform often use queue processors.

All queue processors are rule-resolved against the context that is specified in the System Runtime Context. By default, Pega Platform adds applications to the System Runtime Context; you can update the context as required. When configuring the Queue-For-Processing method in an activity, or the Run in background shape in a stage, it is possible to specify an alternate access group. It is also possible for the activity that the queue processor runs to change the access group. An example is the pzInitiateTestSuiteRun activity that the pzInitiateTestSuiteRun queue processor runs.

Use standard queue processors for simple queue management or dedicated queue processors for customized or delayed message processing. If you define a queue processor as delayed, define the date and time while calling through the Queue-For-Processing method or by using the Run in background smart shape.

Queues are multi-threaded and shared across all nodes. Each queue processor can process messages across 20 partitions, so queue processor rules can support up to 20 separate processing threads simultaneously without conflict. Using multiple queue processors on separate nodes to process the items in a queue can also improve throughput.
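The link between partitions and conflict-free threads can be illustrated with a small Python sketch. It is not Pega or Kafka code: the function names are hypothetical, and it assumes that the partition count equals the 20-thread limit and that each partition has exactly one owning thread.

```python
import zlib

NUM_PARTITIONS = 20  # assumption: one partition per thread, up to the 20-thread limit


def partition_for(message_key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a message key to a partition deterministically (hypothetical helper)."""
    return zlib.crc32(message_key.encode("utf-8")) % num_partitions


def assign_partitions(num_threads: int, num_partitions: int = NUM_PARTITIONS) -> dict:
    """Spread partitions round-robin over threads so each partition has one owner."""
    if num_threads < 1:
        raise ValueError("at least one thread is required")
    active = min(num_threads, num_partitions)  # threads beyond the partition count stay idle
    owners = {thread: [] for thread in range(active)}
    for partition in range(num_partitions):
        owners[partition % active].append(partition)
    return owners
```

Because every partition has a single owner, two threads never read the same message, which is why adding threads beyond the partition count brings no further gain in this model.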

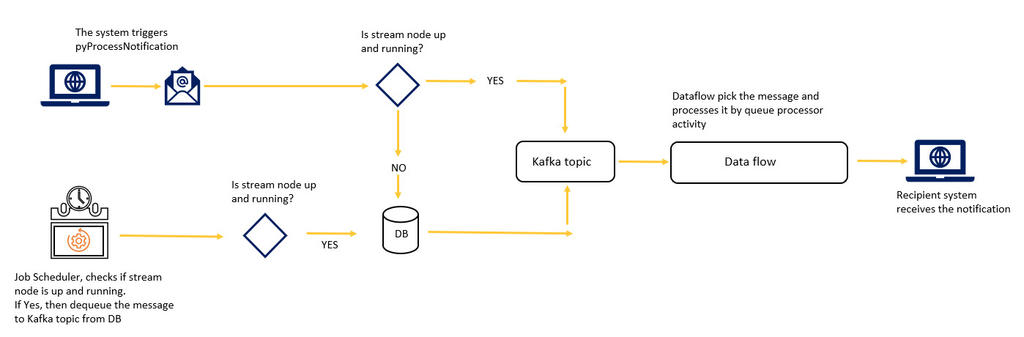

For example, suppose you want to send a notification. You can use the pyProcessNotification queue processor, as shown in the following figure. Whenever users queue a message to a queue processor, the system queues the message to a database or a Kafka topic folder, depending on the availability of the stream node. The data flow work object that corresponds to the queue processor selects the message from a partition in the Kafka topic list, and then pushes it to the queue processor activity to process the message, which sends a notification. A job scheduler runs periodically to check if the stream node is running. If so, the system pushes all the delayed messages to the Kafka topic.
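The flow in this example can be modeled with a toy Python class. This is a simplified sketch, not a Pega or Kafka API: the class and method names are hypothetical, in-memory queues stand in for the database and the Kafka topic, and partitions are ignored.

```python
from collections import deque
from typing import Callable


class QueueSketch:
    """Toy model of the message flow described above."""

    def __init__(self, activity: Callable[[str], None]) -> None:
        self.activity = activity          # stands in for the queue processor activity
        self.stream_node_running = False
        self.database = deque()           # holds messages while no stream node is up
        self.topic = deque()              # stands in for the Kafka topic

    def queue_message(self, message: str) -> None:
        # Route the message based on stream node availability.
        if self.stream_node_running:
            self.topic.append(message)
        else:
            self.database.append(message)

    def periodic_check(self) -> int:
        """Stands in for the job scheduler: push held messages once a stream node runs."""
        if not self.stream_node_running:
            return 0
        moved = len(self.database)
        while self.database:
            self.topic.append(self.database.popleft())
        return moved

    def consume(self) -> int:
        """Stands in for the data flow: hand each topic message to the activity."""
        processed = 0
        while self.topic:
            self.activity(self.topic.popleft())
            processed += 1
        return processed
```

In this model, a message queued before the stream node starts stays in the holding area until `periodic_check` moves it to the topic, after which `consume` delivers it in arrival order.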

Performance has two dimensions: time to process a message and total message throughput. You can improve performance by using the following actions:

- Optimize the activity to reduce the time to process a message. The time to process a message depends on the amount of work that the processing activity performs.

- Enhance total message throughput:

  - Scale out by increasing the number of processing nodes. This setting applies only to on-premises users. For Pega Cloud® Services, you require a larger sandbox.

  - Scale up by increasing the number of threads per node (up to 20 threads per cluster).
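As a rough illustration of how the two dimensions interact, the following Python sketch estimates throughput from the number of nodes, threads per node, and time per message. It is an idealized model with hypothetical function names: it assumes perfect scaling and applies only the 20-thread cluster limit stated above.

```python
MAX_THREADS_PER_CLUSTER = 20  # limit stated in the text


def effective_threads(nodes: int, threads_per_node: int) -> int:
    """Threads that can actually process messages, capped at the cluster limit."""
    return min(nodes * threads_per_node, MAX_THREADS_PER_CLUSTER)


def messages_per_second(nodes: int, threads_per_node: int, seconds_per_message: float) -> float:
    """Idealized throughput: each thread handles one message at a time."""
    if seconds_per_message <= 0:
        raise ValueError("seconds_per_message must be positive")
    return effective_threads(nodes, threads_per_node) / seconds_per_message
```

Under these assumptions, two nodes with five threads each and 0.5 seconds per message give 20 messages per second. Halving the activity time to 0.25 seconds doubles that to 40, whereas adding threads beyond the cap changes nothing.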

As soon as you run a queue processor, the system creates a topic that corresponds to this queue processor in the Kafka server. Based on the number of partitions mentioned in the server.properties file, the system creates the same number of folders in the tomcat\Kafka-data folder.

At least one stream node is necessary for the system to queue messages to the Kafka server. If you do not define a stream node in a cluster, the system queues items to the database, and then these items are processed when a stream node is available.

Default queue processors

Pega Platform provides three default queue processors:

- pyProcessNotification

- pzStandardProcessor

- pyFTSIncrementalIndexer

pyProcessNotification

The pyProcessNotification queue processor sends notifications to customers and runs the pxNotify activity to calculate the list of recipients, the message, or the channel. The possible channels include email, a gadget notification, or a push notification.

pzStandardProcessor

You can use the pzStandardProcessor queue processor for standard asynchronous processing when:

- Processing does not require high throughput, or processing resources can have slight delays.

- Default and standard queue behaviors are acceptable.

This queue processor is useful for tasks such as submitting each status change to an external system. You can use pzStandardProcessor to run bulk processes in the background. When the queue processor resolves all the items from the queue, you receive a notification about the number of successful and failed attempts.

pyFTSIncrementalIndexer

The pyFTSIncrementalIndexer queue processor performs incremental indexing in the background. This queue processor posts rule, data, and work objects into the search subsystem as soon as you create or change them, which helps keep search data current and closely reflects the content of the database.

Pega Platform contains search and reporting service features for use on dedicated, backing service nodes. Backing nodes are supporting nodes and separate from the Pega Platform service nodes.

The system indexes data by using the following queue processors:

- pySASBatchIndexClassesProcessor

- pySASBatchIndexProcessor

- pySASIncrementalIndexer

Job scheduler

Use a job scheduler rule when there is no requirement to queue a recurring task. Unlike queue processors, the job scheduler must decide which records to process and establish each record’s step page context before working on that record. For example, suppose you need to generate statistics every midnight for reporting purposes. In that case, the output of a report definition can determine the list of items to process. The job scheduler then operates on each item in the list.
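The pattern of a job that selects its own work and then handles each record in its own context can be sketched in Python as follows. This is not Pega code: the function and parameter names are hypothetical, a plain dictionary stands in for a step page, and continuing after a failed record is an assumption of the sketch.

```python
from typing import Callable, Iterable


def run_scheduled_job(
    find_records: Callable[[], Iterable[dict]],
    process_record: Callable[[dict], None],
) -> dict:
    """Select the work, then process each record in a fresh per-record context."""
    summary = {"processed": 0, "failed": 0}
    for record in find_records():      # stands in for the report definition output
        page = dict(record)            # fresh context per record, like a step page
        try:
            process_record(page)
            summary["processed"] += 1
        except Exception:
            summary["failed"] += 1     # assumption: one bad record does not stop the run
    return summary
```

For example, passing a function that returns three records and a handler that rejects one of them yields a summary of two processed records and one failure.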

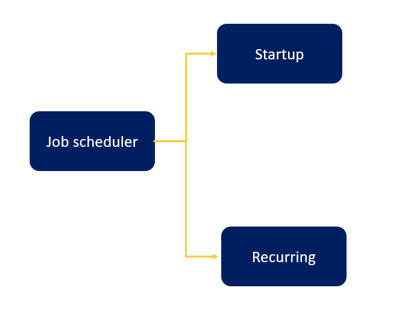

The preceding diagram shows how job schedulers are rule-resolved against the context in the System Runtime Context or how you can configure job schedulers to run against any specific access group. You can configure a job scheduler to run on startup (only when All associated nodes is selected), on a periodic basis (such as daily, weekly, monthly, or yearly), or on a recurring basis (such as multiple times a day).

If an activity requires a specific context, you can select Specify access group to provide the access group. For example, if the job scheduler requires a manager role, you can specify a manager access group.

If an activity requires the System Runtime Context (for example, to use the same context for the job scheduler and activity resolution), select Use System Runtime Context on the rule form of the job scheduler.

A job scheduler can run on one or more nodes in a cluster or any specific node in a cluster. To run multiple job schedulers simultaneously, configure the number of threads for the job scheduler thread pool by modifying the prconfig.xml file. The default value is 5, and the number of threads should equal the number of job schedulers running simultaneously.
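The effect of the pool size can be demonstrated with a generic Python thread pool. This sketch only illustrates thread pool behavior in general. It does not use the Pega thread pool or any prconfig.xml setting, and the function name and sleep-based jobs are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def peak_concurrency(job_count: int, pool_size: int = 5, job_seconds: float = 0.05) -> int:
    """Run job_count dummy jobs on a fixed pool and report the most that ran at once."""
    lock = threading.Lock()
    running = 0
    peak = 0

    def job() -> None:
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        time.sleep(job_seconds)  # stands in for the scheduled activity
        with lock:
            running -= 1

    # Leaving the with block waits for all submitted jobs to finish.
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        for _ in range(job_count):
            pool.submit(job)
    return peak
```

With a pool of five, no more than five jobs ever run at the same moment, so any additional jobs wait for a free thread. This is why the pool size should match the number of job schedulers expected to run simultaneously.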

Unlike queue processors, job schedulers decide whether a record requires a lock and whether it needs to commit records that use Obj-Save to update. If a job scheduler creates a case or opens a case with a lock and causes it to move to a new assignment or complete its life cycle, the job scheduler does not have to issue a commit.

Default job schedulers

Pega Platform provides three default job schedulers that can be useful in your application:

- Node cleaner

- Cluster and database cleaner

- Persist node and cluster state

Node cleaner

The node cleaner cleans up expired locks and outdated module version reports.

By default, the node cleaner job scheduler (pyNodeCleaner) runs the Code-.pzNodeCleaner activity on all the nodes in the cluster.

Cluster and database cleaner

By default, the cluster and database cleaner job scheduler (pyClusterAndDBCleaner) runs the Code-.pzClusterAndDBCleaner activity on only one node in the cluster, once every 24 hours, for housekeeping tasks. This job purges the following items:

- Older records from log tables

- Requestors that have been idle for 48 hours

- Passivation data for expired requestors (clipboard cleanup)

- Expired locks

- Cluster state data that is older than 90 days
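The two time-based rules in this list can be expressed as simple predicates. The following Python sketch is illustrative only: the function names are hypothetical, and the handling of the exact boundary (at 48 hours, or at exactly 90 days) is an assumption rather than documented behavior.

```python
from datetime import datetime, timedelta

IDLE_REQUESTOR_LIMIT = timedelta(hours=48)   # from the list above
CLUSTER_STATE_RETENTION = timedelta(days=90)  # from the list above


def should_purge_requestor(last_active: datetime, now: datetime) -> bool:
    """True when a requestor has been idle for at least 48 hours (boundary assumed inclusive)."""
    return now - last_active >= IDLE_REQUESTOR_LIMIT


def should_purge_cluster_state(saved_at: datetime, now: datetime) -> bool:
    """True when cluster state data is older than 90 days (boundary assumed exclusive)."""
    return now - saved_at > CLUSTER_STATE_RETENTION
```

A daily run would apply predicates like these to each candidate record and delete the ones that match.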

Persist node and cluster state

pyPersistNodeState saves the node state on node startup. The system saves cluster state data once a day by using the pyPersistClusterState job scheduler.

The pyClusterAndDBCleaner job scheduler purges cluster state data that is older than 90 days.

Standard agent

Caution: Consider using a job scheduler or queue processor instead of an agent.

Standard agents are preferable when you have items in the queue for processing. With standard agents, you can focus on configuring the specific operations to perform. Pega Platform provides built-in features for error handling, queuing and dequeuing, and commits when using standard agents.

By default, standard agents run in the security context of the person who queues the task. This approach can be advantageous when users with different access groups use the same agent. Standard agents often apply to an application with many implementations that stem from a common framework or in default agents that Pega Platform includes. The access group setting on an agents rule applies to advanced agents only, which are not queued. To always run a standard agent in a given security context, switch the queued access group by overriding the System-Default-EstablishContext activity, and then invoke the setActiveAccessGroup() Java method within that activity.

All nodes share queues. Using multiple standard agents on separate nodes to process the items in a queue can improve the throughput.

Note: There are several examples of default agents using the standard mode, such as the agent that processes service-level agreements (ServiceLevelEvents) in the Pega-ProCom ruleset.

Advanced agent

Use advanced agents when there is no requirement to queue and perform a recurring task. Advanced agents are also helpful when there is a need for more complex queue processing. When advanced agents process unqueued items, the advanced agent determines the work to perform. For example, if you need to generate statistics every midnight for reporting purposes, the output of a report definition can determine the list of items to process.

Note: Several default agents use the advanced mode, such as the agent for automatic column population (pxAutomaticColumnPopulation) in the Pega-ImportExport ruleset.

When an advanced agent uses queuing, all queuing operations occur in the agent activity.

Note: The default agent ProcessServiceQueue in the Pega-IntSvcs ruleset is an example of an advanced agent that processes queued items.

When running on a multi-node configuration, configure agent schedules so that the advanced agents coordinate their efforts. To coordinate agents, select Run this agent on only one node at a time and delay the next run of the agent across the cluster by a specified time period. These advanced settings are available on the Agent rule form.

Default agents

Default agents run in the system (similar to services configured to run in a computer operating system) with the initial installation of Pega Platform. Review and tune the agent configurations on a production system for the following reasons:

- There are default agents that are unnecessary for most applications because the agents implement legacy or seldom-used features.

- There are default agents that should not run in production.

- There are default agents that run at inappropriate times by default.

- There are default agents that run more frequently than needed or not frequently enough.

- There are default agents that run on all nodes by default but should run on only one node.

For example, by default, several Pega-DecisionEngine agents run in the system. Disable these agents if decision management does not apply to the applications. Enable some agents, such as the Pega-AutoTest agents, only in a development or QA environment. Some agents are designed to run on a single node in a multinode configuration.

For a complete review of agents and their configuration settings, see Agents and agent schedules. Because these agents are in locked rulesets, they cannot be modified. To change the configuration for these agents, update the agent schedules generated from the agents rule.
