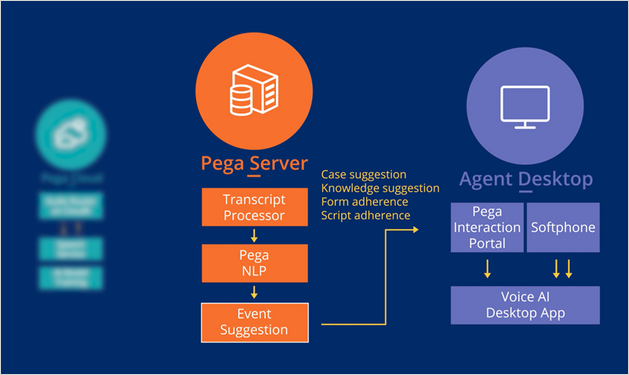

Voice AI architecture

This video describes the main components of the Pega Voice AI™ architecture.

Discover how voice data flows through the system.

Transcript

When a CSR receives or starts a call, the Interaction Portal sends an event to the Voice AI Desktop application.

Voice AI Desktop picks up audio streams and routes them to Audio Router, which then sends the audio to Speech Service for real-time transcription. Audio Router receives the transcripts back in real time and temporarily caches them in a Redis database. Pega Server continuously polls Audio Router to fetch transcripts. The polling occurs every 1.5 seconds to keep processing as close to real time as possible.
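The polling step can be illustrated with a minimal Python sketch. It is not Pega's implementation: `poll_transcripts`, `fetch`, and `handle` are hypothetical names, with `fetch` standing in for the request to Audio Router and `handle` for the hand-off to NLP. Only the 1.5-second interval comes from the transcript.

```python
import time
from typing import Callable, Iterable

POLL_INTERVAL_SECONDS = 1.5  # interval stated in the transcript


def poll_transcripts(
    fetch: Callable[[], Iterable[str]],
    handle: Callable[[str], None],
    max_polls: int,
    interval: float = POLL_INTERVAL_SECONDS,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call fetch() max_polls times and pass every transcript to handle().

    fetch and handle are hypothetical stand-ins for the Audio Router
    request and the NLP step. Returns the number of transcripts handled.
    """
    handled = 0
    for poll_number in range(max_polls):
        for transcript in fetch():
            handle(transcript)
            handled += 1
        # Wait between polls, but not after the final one.
        if poll_number < max_polls - 1:
            sleep(interval)
    return handled
```

Passing `sleep` as a parameter lets the loop be exercised without real delays. A production poller would run until the call ends rather than for a fixed number of polls.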

Pega Server runs the transcripts through natural language processing (NLP) to extract topics and entities. Topics and entities drive the real-time suggestions that the CSR receives in the Interaction Portal.

Next are the components of the agent desktop.

Agent desktop

There are three distinct components that comprise the agent desktop.

The Interaction Portal is the primary work application for the CSR. In the Interaction Portal, CSRs can process interactions and cases for their customers. The Interaction Portal also receives the suggestions that Voice AI makes. Voice AI Desktop is installed on each CSR's computer and captures audio streams at the operating system layer. The final component is a softphone, which enables the telephony conversation between the CSR and the customer.

Voice AI Desktop does not require direct integration with the softphone. If you have Pega Call configured, the Interaction Portal acts as the broker between the Computer Telephony Integration (CTI) layer and Voice AI Desktop.

When a CSR logs in to the Interaction Portal in Pega Customer Service, a WebSocket connection is initiated with Voice AI Desktop. When CTI events such as call connection or disconnection are detected, the Interaction Portal instructs Voice AI Desktop to start and stop the audio capture. Voice AI Desktop picks up both audio streams directly from the computer's operating system and transmits them to the Audio Router.
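The start and stop behavior described above can be sketched as a small state machine. This is an illustration only: the event names (`call_connected`, `call_disconnected`) and command names (`start_capture`, `stop_capture`) are assumptions, not the actual messages exchanged over the WebSocket connection.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CaptureController:
    """Translate CTI events into capture commands for Voice AI Desktop.

    Event and command names are hypothetical placeholders.
    """

    capturing: bool = False
    commands: List[str] = field(default_factory=list)

    def on_cti_event(self, event: str) -> None:
        if event == "call_connected" and not self.capturing:
            self.capturing = True
            self.commands.append("start_capture")
        elif event == "call_disconnected" and self.capturing:
            self.capturing = False
            self.commands.append("stop_capture")
        # Any other event, or a repeated one, leaves the state unchanged.
```

Tracking the `capturing` flag keeps a duplicate connection or disconnection event from issuing a second command.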

Audio Router service

Audio Router is a Pega Cloud®-based service. This service routes audio streams from Voice AI Desktop to Speech Service to obtain the transcripts.

The audio channel has a separate stream for the CSR and for the customer.

The Audio Router service receives audio streams, sends them to the Speech Service for transcription, and then caches the transcripts until Pega Server can fetch them.

Transcripts are cached for 5 minutes, then deleted, so there is no permanent storage of personal data.
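To make the five-minute retention concrete, here is a minimal in-memory sketch of a time-limited cache. The real service caches transcripts in Redis, which supports key expiration natively; the source does not say how expiration is configured, so the class below is only a stand-in. `TranscriptCache`, `call_id`, and the speaker labels are hypothetical names.

```python
import time
from typing import Callable, Dict, List, Tuple

TTL_SECONDS = 5 * 60  # five-minute retention stated in the transcript


class TranscriptCache:
    """In-memory stand-in for a cache whose entries expire after a TTL."""

    def __init__(self, ttl: float = TTL_SECONDS,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl
        self._clock = clock
        # call_id -> list of (stored_at, speaker, text)
        self._entries: Dict[str, List[Tuple[float, str, str]]] = {}

    def put(self, call_id: str, speaker: str, text: str) -> None:
        entry = (self._clock(), speaker, text)
        self._entries.setdefault(call_id, []).append(entry)

    def get(self, call_id: str) -> List[Tuple[str, str]]:
        """Return unexpired (speaker, text) pairs and drop expired ones."""
        cutoff = self._clock() - self._ttl
        fresh = [e for e in self._entries.get(call_id, []) if e[0] > cutoff]
        if fresh:
            self._entries[call_id] = fresh
        else:
            self._entries.pop(call_id, None)
        return [(speaker, text) for _, speaker, text in fresh]
```

Each entry keeps its speaker label, which mirrors the separate CSR and customer streams. The injectable `clock` makes expiry testable without waiting five minutes.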

The Audio Router service is maintained by the Pega Support team. Customers do not configure or maintain the Audio Router. The Pega Support team monitors the service through service-level agreements (SLAs) and reports.

Speech Service

In the architecture diagram, you see that Audio Router connects to Speech Service, and both modules live in Pega Cloud.

Speech Service receives the two audio streams from Audio Router, transcribes the audio into text, and returns the transcripts to Audio Router in real time. The transcripts are compatible with what NLP expects when it is looking for topics and entities.

Amazon Web Services (AWS) hosts Speech Service. Pega Support manages Speech Service for customers. Customers do not directly access Speech Service.

Pega Support can use the AI Model Training pipeline to improve transcription accuracy. For example, for an organization that provides medical services, Pega Support adds medical terms that train the AI model. The customer provides text or audio data, and Pega Support uses the data to train an existing AI model. The customer can immediately use the new model by updating a Config Set setting in Customer Service.

Pega Server

Pega Server uses three components to process the Voice AI transcripts and send information back to the agent desktop in real time. The three components are Transcript Processor, Pega NLP, and Event Suggestion.

Transcript Processor continuously polls the Audio Router cache for new transcripts. When it finds a new transcript, Transcript Processor brings the data flow into Pega Server and sends it to NLP.
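The source does not say how Transcript Processor recognizes a transcript as new. One common approach, shown here purely as an assumption, is to keep a cursor on the last sequence number processed. `select_new` and the sequence numbers are hypothetical.

```python
from typing import List, Tuple

# (sequence number, text); sequence numbers are an assumption for this sketch
Transcript = Tuple[int, str]


def select_new(cached: List[Transcript],
               last_seen: int) -> Tuple[List[Transcript], int]:
    """Return transcripts newer than last_seen, plus the updated cursor."""
    new = sorted(t for t in cached if t[0] > last_seen)
    if new:
        last_seen = new[-1][0]
    return new, last_seen
```

Because the cache still holds older entries until they expire, a cursor like this keeps repeated polls from sending the same transcript to NLP twice.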

NLP analyzes the data to identify topics and entities, which drive the suggestions that CSRs see in real time in the Interaction Portal.

For example, if a customer calls to change their address, NLP can identify the Address Change topic and entities such as the street address and ZIP code.
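The address change example can be mimicked with a toy extractor. Pega NLP relies on trained models, not on the keyword lists and regular expression used here; the phrases, the `analyze` function, and the US ZIP code pattern are illustrative assumptions only.

```python
import re
from typing import Dict, List

# Hypothetical trigger phrases; a real model is trained, not keyword based.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "Address Change": ["change my address", "new address", "moved"],
}

# US ZIP code: five digits with an optional four-digit extension.
ZIP_PATTERN = re.compile(r"\b\d{5}(?:-\d{4})?\b")


def analyze(text: str) -> Dict[str, object]:
    """Return the topics and ZIP code entities found in a transcript line."""
    lowered = text.lower()
    topics = [
        topic
        for topic, phrases in TOPIC_KEYWORDS.items()
        if any(phrase in lowered for phrase in phrases)
    ]
    entities = {"zip_code": ZIP_PATTERN.findall(text)}
    return {"topics": topics, "entities": entities}
```

For instance, `analyze("I moved, my new address is 12 Main Street, Boston 02108")` yields the Address Change topic and the ZIP code `02108`.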

The Event Suggestion component receives the topics and entities extracted by NLP, identifies related case types and knowledge articles, and sends suggested cases and articles to the Interaction Portal. A CSR who is in a live session with the customer sees the suggestions that the conversation prompts.
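The mapping from topics to suggestions can be pictured as a lookup. The sketch below assumes a simple dictionary; the `Suggestion` type, the `suggest` function, and the case and article names are all hypothetical and do not reflect how Customer Service stores this configuration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Suggestion:
    kind: str  # "case" or "article"
    name: str


# Hypothetical configuration: topic -> related case types and articles.
TOPIC_MAP: Dict[str, Dict[str, List[str]]] = {
    "Address Change": {
        "cases": ["Update Address"],
        "articles": ["How to change a mailing address"],
    },
}


def suggest(topics: List[str]) -> List[Suggestion]:
    """Return de-duplicated suggestions for the detected topics, in order."""
    suggestions: List[Suggestion] = []
    seen = set()
    for topic in topics:
        mapping = TOPIC_MAP.get(topic, {})
        for kind, key in (("case", "cases"), ("article", "articles")):
            for name in mapping.get(key, []):
                candidate = Suggestion(kind, name)
                if candidate not in seen:
                    seen.add(candidate)
                    suggestions.append(candidate)
    return suggestions
```

Topics without a configured mapping produce no suggestions, and a topic detected several times in one conversation is suggested only once.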

You can configure case types, knowledge articles, and topics in the Voice AI channel in Customer Service.
